It’s not alive

Thanks to OZ friend-of-the-family Dr. Michael Mulvey for sharing this column about embodied cognition and ChatGPT.

The Deep Metaphors that we use in our ZMET research are based on embodied cognition. We learn about a concept such as Balance from our very earliest days when we sit up, crawl, walk, and, eventually, run. We then use that embodied concept of “balance” to make sense of new and more abstract things that we encounter throughout life – such as the justice system, human relationships, even personalities and emotions (e.g., one person can be “level-headed” while another can be “head over heels” in love).

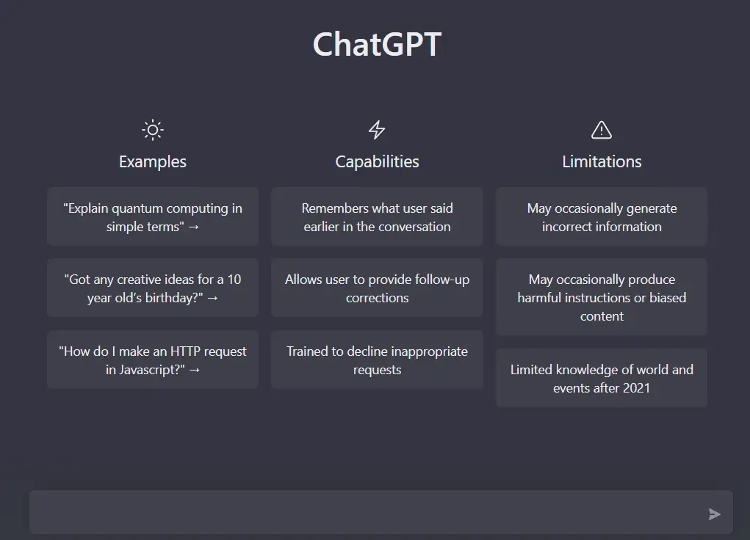

The authors of this commentary have run experiments in which computer models of language are asked how to perform various innovative tasks. For example, if you were trying to fan a fire, would you use a stone or a paper map? ChatGPT and other computer language models struggle mightily to answer these kinds of questions correctly.

The professors suggest this is because the models don’t have bodies and therefore don’t really understand what they are saying. The models are good at learning rules and patterns embedded in human language, but they don’t understand what those words really mean because they have no experience navigating the physical world with senses or with any sense of physical or emotional purpose.

Our bodies shape the language we use and how we see the world. As the fascinating video embedded in the article notes, if we were spherical creatures with eyes on all sides of our bodies, we wouldn’t understand the notion of forward and backward, which would affect (among other things) how we think about time – which we often describe using “forward” and “backward” metaphors.

Although ChatGPT is immensely powerful at making sense of language patterns and combing through the vast amount of information available on the internet, it is not “thinking” and it is not sentient.

Entering a probe in ChatGPT is no replacement for good market research, where we explore the connection between metaphors and unconscious thinking. Those types of insights will not be available online, both because they are unconscious and because computer language models don’t have bodies and emotions that help them navigate the world.